The Python ecosystem#

Why Python?#

Python in a nutshell#

Python is a multi-purpose programming language created in 1989 by Guido van Rossum and developed under an open-source license.

It has the following characteristics:

multi-paradigm (procedural, functional, object-oriented);

dynamic types;

automatic memory management;

and much more!
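As a rough illustration of the characteristics listed above, the sketch below computes the same total in three styles and then rebinds one name to values of different types. The names `total_procedural`, `total_functional` and `Accumulator` are made up for this example.

```python
from functools import reduce


# Procedural style: an explicit loop that updates a running total
def total_procedural(values):
    total = 0
    for value in values:
        total += value
    return total


# Functional style: fold the sequence with a function, no mutation
def total_functional(values):
    return reduce(lambda acc, value: acc + value, values, 0)


# Object-oriented style: state and behaviour live together in a class
class Accumulator:
    def __init__(self):
        self.total = 0

    def add(self, value):
        self.total += value
        return self


values = [1, 2, 3, 4]
acc = Accumulator()
for value in values:
    acc.add(value)

# Dynamic typing: the same name can be bound to objects of different types
thing = 42
first_type = type(thing).__name__
thing = "forty-two"
second_type = type(thing).__name__

print(total_procedural(values), total_functional(values), acc.total)
print(first_type, second_type)
```

Nothing in this code frees memory explicitly: objects are reclaimed automatically once they are no longer referenced.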

The Python syntax#

For more examples, see the Python cheatsheet.

def hello(name):

    print(f"Hello, {name}")

friends = ["Lou", "David", "Iggy"]

for friend in friends:

    hello(friend)

Hello, Lou

Hello, David

Hello, Iggy

Introduction to Data Science#

Main objective: extract insight from data.

Expression coined in 1997 in the statistics community.

“A Data Scientist is a statistician that lives in San Francisco”.

2012: “Sexiest job of the 21st century” (Harvard Business Review).

Controversy over the expression’s real usefulness.

Python, a standard for ML and Data Science#

Language qualities (ease of use, simplicity, versatility).

Involvement of the scientific and academic communities.

Rich ecosystem of dedicated open source libraries.

Essential Python tools#

Anaconda#

Anaconda is a scientific distribution including Python and many (1500+) specialized packages. It is one of the easiest ways to set up a work environment for ML and Data Science with Python.

Jupyter Notebook#

The Jupyter Notebook is an open-source web application for creating and sharing documents (.ipynb files) that may contain live code, equations, visualizations and text.

It has become the de facto standard for sharing research results in numerical fields.
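To make the file format less abstract: an .ipynb file is a JSON document holding a list of cells. The sketch below builds a minimal two-cell notebook by hand using only the standard library. The cell contents are made up, nothing is written to disk, and in practice notebooks are produced by Jupyter itself rather than assembled this way.

```python
import json

# Minimal notebook structure (nbformat 4): metadata plus a list of cells
notebook = {
    "nbformat": 4,
    "nbformat_minor": 4,
    "metadata": {},
    "cells": [
        {
            # Text cell: Markdown, may include LaTeX equations
            "cell_type": "markdown",
            "metadata": {},
            "source": ["# A title\n", "An equation: $e^{i\\pi} + 1 = 0$"],
        },
        {
            # Code cell: source plus the outputs of its last execution
            "cell_type": "code",
            "metadata": {},
            "execution_count": None,
            "outputs": [],
            "source": ["print('Hello from a code cell')"],
        },
    ],
}

# Serialize to JSON text, as it would appear inside an .ipynb file
serialized = json.dumps(notebook, indent=1)

# Read it back and list the cell types
cell_types = [cell["cell_type"] for cell in json.loads(serialized)["cells"]]
print(cell_types)
```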

Google Colaboratory#

Cloud environment for executing Jupyter notebooks on CPU, GPU or TPU.

NumPy#

NumPy is a Python library providing support for multi-dimensional arrays, along with a large collection of mathematical functions to operate on these arrays.

It is the fundamental package for scientific computing in Python.

# Import the NumPy package under the alias "np"

import numpy as np

x = np.array([1, 4, 2, 5, 3])

print(x[:2])

print(x[2:])

print(np.sort(x))

[1 4]

[2 5 3]

[1 2 3 4 5]
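The example above only uses a one-dimensional array. As a complementary sketch, here is how a two-dimensional array and a few of NumPy's mathematical functions might be used; the array `m` and the variable names are chosen for illustration.

```python
import numpy as np

# A 2-D array with 2 rows and 3 columns
m = np.array([[1, 2, 3], [4, 5, 6]])

shape = m.shape              # dimensions of the array
scaled = m * 10              # arithmetic is applied element-wise
column_sums = m.sum(axis=0)  # reduce along rows: one sum per column
row_sums = m.sum(axis=1)     # reduce along columns: one sum per row
mean = m.mean()              # mean over all elements
roots = np.sqrt(m)           # mathematical functions also work element-wise

print(shape)
print(scaled)
print(column_sums, row_sums, mean)
print(roots)
```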

pandas#

pandas is a Python library providing high-performance, easy-to-use data structures and data analysis tools.

The primary data structures in pandas are implemented as two classes:

DataFrame, which you can imagine as a relational data table, with rows and named columns.

Series, which is a single column. A DataFrame contains one or more Series and a name for each Series.

The DataFrame is a commonly used abstraction for data manipulation.

import pandas as pd

# Create a DataFrame object containing two Series

pop = pd.Series({"CAL": 38332521, "TEX": 26448193, "NY": 19651127})

area = pd.Series({"CAL": 423967, "TEX": 695662, "NY": 141297})

pd.DataFrame({"population": pop, "area": area})

| | population | area |
|---|---|---|
| CAL | 38332521 | 423967 |
| TEX | 26448193 | 695662 |
| NY | 19651127 | 141297 |

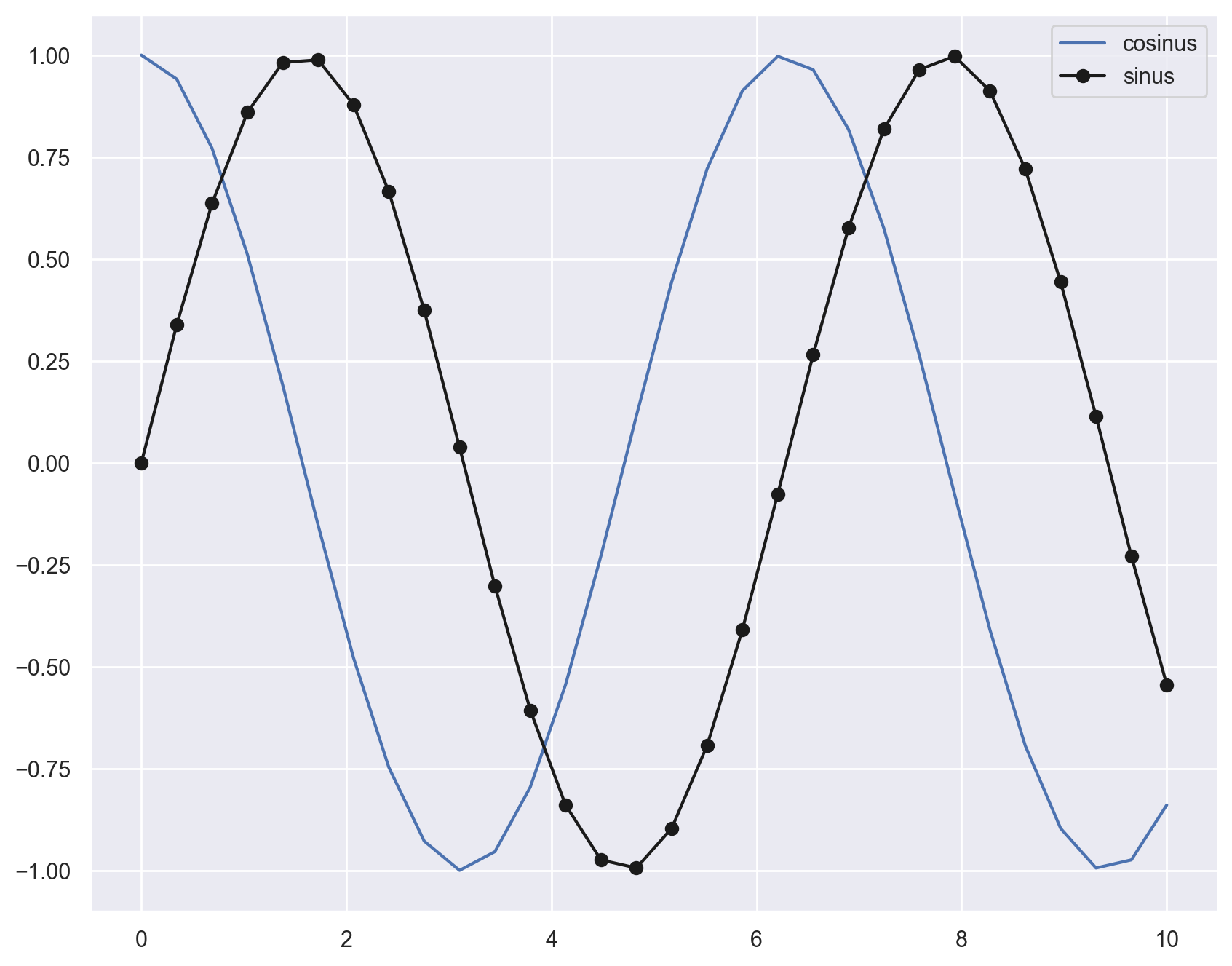
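To illustrate the relationship between the two classes, the sketch below rebuilds the same DataFrame, extracts one column as a Series, and derives a new column from two existing ones. The names `states` and `density` are introduced here for illustration only.

```python
import pandas as pd

pop = pd.Series({"CAL": 38332521, "TEX": 26448193, "NY": 19651127})
area = pd.Series({"CAL": 423967, "TEX": 695662, "NY": 141297})
states = pd.DataFrame({"population": pop, "area": area})

# Selecting a single column of a DataFrame returns a Series
population = states["population"]
print(type(population).__name__)

# Arithmetic between Series is aligned on the index (here, the state codes)
states["density"] = states["population"] / states["area"]
print(states)

# Index label of the row with the highest density
densest = states["density"].idxmax()
print(densest)
```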

Matplotlib and Seaborn#

Matplotlib is a Python library for 2D plotting. Seaborn is a visualization library built on top of Matplotlib that improves the presentation of its graphics.

import matplotlib.pyplot as plt

import seaborn as sns

# Set up plots (should be done in a separate cell for better results)

%matplotlib inline

plt.rcParams["figure.figsize"] = 10, 8

%config InlineBackend.figure_format = "retina"

sns.set()

# Plot two functions on the same figure

x = np.linspace(0, 10, 30)

plt.plot(x, np.cos(x), label="cosine")

plt.plot(x, np.sin(x), '-ok', label="sine")

plt.legend()

plt.show()

scikit-learn#

scikit-learn is a multi-purpose library built on NumPy, SciPy and Matplotlib, providing dozens of built-in ML algorithms and models.

It is the Swiss army knife of Machine Learning.

Fun fact: scikit-learn started in 2007 as a Google Summer of Code project, and INRIA has led its development since 2010.
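Most scikit-learn models follow the same pattern: create the model, call `fit` on training data, then call `predict` on new inputs. The sketch below shows this with a linear regression on synthetic data; the data (points on the line y = 2x + 1) is made up for the example.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Synthetic training data lying exactly on the line y = 2x + 1
X = np.arange(10).reshape(-1, 1)  # shape (n_samples, n_features)
y = 2 * X.ravel() + 1

# Create the model, then learn its parameters from the data
model = LinearRegression()
model.fit(X, y)

# Use the trained model on an unseen input
prediction = model.predict(np.array([[20]]))

print(model.coef_, model.intercept_, prediction)
```

Swapping `LinearRegression` for another estimator usually leaves the rest of the code unchanged, which is a large part of the library's appeal.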

TensorFlow and Keras#

Keras is a high-level, user-friendly API for creating and training neural nets.

Once compatible with several back-end tools (TensorFlow, Theano, CNTK…), Keras is now the official high-level API of TensorFlow, Google’s Machine Learning platform.

The 2.3.0 release (Sept. 2019) was the last major release of multi-backend Keras.

See this notebook for an introduction to TF+Keras.

PyTorch#

PyTorch is a Machine Learning platform originally developed by Facebook (now Meta) and competing with TensorFlow for the hearts and minds of ML practitioners worldwide. It provides:

an array manipulation API similar to NumPy;

an autodifferentiation engine for computing gradients;

a neural network API.
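The three items above can be sketched in a few lines. This is a minimal illustration assuming PyTorch is installed; the tensor values and the function being differentiated are arbitrary choices for the example.

```python
import torch
from torch import nn

# 1. Array manipulation: tensors support NumPy-like indexing and functions
x = torch.tensor([1.0, 4.0, 2.0, 5.0, 3.0])
first_two = x[:2]
sorted_x = torch.sort(x).values

# 2. Autodifferentiation: for f(w) = w**2 + 3w, df/dw at w = 2 is 2*2 + 3 = 7
w = torch.tensor(2.0, requires_grad=True)
f = w**2 + 3 * w
f.backward()  # computes df/dw and stores it in w.grad

# 3. Neural network API: one linear layer mapping 5 inputs to 1 output
layer = nn.Linear(in_features=5, out_features=1)
output = layer(x)

print(first_two, sorted_x)
print(w.grad)
print(output.shape)
```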